Introduction:

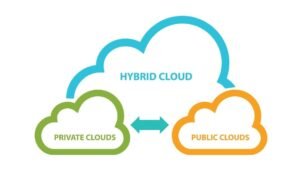

Scalability is a crucial aspect of cloud computing: it lets businesses absorb increasing workloads while maintaining performance. Because demand fluctuates, the ability to scale resources up or down is essential for both performance and cost-efficiency. In this article, we’ll explore strategies and best practices for achieving scalability in the cloud and discuss how businesses can handle growing workloads.

Understanding Scalability in the Cloud:

Scalability refers to the ability of a system or application to handle an increasing workload without sacrificing performance or availability. In the cloud, scalability is typically achieved through horizontal or vertical scaling:

- Horizontal Scaling (Scale-Out): Horizontal scaling involves adding more instances or resources to distribute the workload across multiple servers or instances. This approach increases capacity and allows for better load distribution and fault tolerance.

- Vertical Scaling (Scale-Up): Vertical scaling involves increasing the capacity of existing resources, such as upgrading the size or performance of a single server or instance. While vertical scaling can provide an immediate capacity boost, it is capped by the largest instance size available, often requires a restart to resize, and leaves that single server as a point of failure.
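To make the difference between the two approaches concrete, here is a minimal back-of-the-envelope sketch. The function names, the instance sizes, and the requests-per-second figures are all hypothetical values chosen for illustration, not numbers from any cloud provider.

```python
import math
from typing import Dict, Optional


def instances_needed(load_rps: float, per_instance_rps: float) -> int:
    """Horizontal scaling: how many identical instances cover the load."""
    if per_instance_rps <= 0:
        raise ValueError("per_instance_rps must be positive")
    # Always keep at least one instance running, even with no load.
    return max(1, math.ceil(load_rps / per_instance_rps))


def smallest_fitting_size(load_rps: float,
                          size_capacities: Dict[str, float]) -> Optional[str]:
    """Vertical scaling: smallest single instance size that covers the load.

    Returns None when even the largest size is too small, which is the
    ceiling that vertical scaling eventually hits.
    """
    fitting = [(cap, name) for name, cap in size_capacities.items()
               if cap >= load_rps]
    return min(fitting)[1] if fitting else None


# Hypothetical capacities in requests per second, for illustration only.
SIZES = {"small": 500, "medium": 1000, "large": 2000}

print(instances_needed(4500, 500))         # 9 small instances
print(smallest_fitting_size(1500, SIZES))  # large
print(smallest_fitting_size(4500, SIZES))  # None: no single size is big enough
```

In this sketch, scaling out keeps working as the load grows (you simply add instances), while scaling up stops as soon as the load exceeds the largest size in the table.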

Strategies for Achieving Scalability in the Cloud:

- Use Auto Scaling Groups: Auto Scaling Groups (ASGs) allow you to automatically adjust the number of instances based on predefined metrics such as CPU utilization, network traffic, or custom metrics. ASGs ensure that your application can handle varying workloads efficiently by dynamically adding or removing instances as needed.

- Implement Load Balancing: Load balancers distribute incoming traffic across multiple instances or servers, ensuring optimal resource utilization and preventing overload on individual resources. By spreading the workload evenly, load balancers improve scalability, availability, and fault tolerance.

- Design for Microservices Architecture: Adopting a microservices architecture enables you to break down monolithic applications into smaller, independent services that can be scaled independently. Each microservice can be deployed and scaled individually, allowing for greater flexibility and scalability.

- Leverage Serverless Computing: Serverless computing platforms such as AWS Lambda, Azure Functions, or Google Cloud Functions provide an event-driven architecture where you only pay for the resources consumed during execution. Serverless computing eliminates the need to manage infrastructure and automatically scales based on demand, making it an ideal choice for handling unpredictable workloads.

- Utilize Distributed Databases: Distributed databases such as Amazon DynamoDB, Google Cloud Spanner, or Apache Cassandra are designed to scale horizontally across multiple nodes, allowing for high availability, scalability, and performance. They let you store and access large volumes of data reliably, though their consistency guarantees differ: Cloud Spanner provides strong consistency, while DynamoDB and Cassandra default to eventual consistency and offer stronger options per request.
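As an illustration of the load-balancing strategy from the list above, here is a minimal round-robin sketch. It is a toy model, not how any managed load balancer is implemented: the `RoundRobinBalancer` class and the backend names are hypothetical, and real load balancers also handle health checks, connection draining, and weighted routing.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backends in a fixed rotation, one per request."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        # cycle() repeats the backend list endlessly in the same order.
        self._rotation = cycle(self._backends)

    def next_backend(self):
        """Return the backend that should receive the next request."""
        return next(self._rotation)


# Hypothetical backend names, for illustration only.
balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
assignments = Counter(balancer.next_backend() for _ in range(9))

# Each backend receives 3 of the 9 requests.
print(dict(assignments))
```

Round-robin is the simplest policy to reason about because it ignores how busy each backend is. Policies such as least-connections trade that simplicity for better behavior when requests vary widely in cost.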

Example: Auto Scaling with AWS EC2

Let’s consider an example of setting up Auto Scaling for an application running on Amazon EC2 instances:

- Create an Auto Scaling Group: Define an Auto Scaling Group with minimum, maximum, and desired instance counts, a launch template (or a legacy launch configuration), and scaling policies based on CPU utilization or other metrics.

- Configure the Launch Template: Define a launch template specifying the instance type, AMI, security groups, and other settings for instances launched by the Auto Scaling Group. AWS now recommends launch templates over the older launch configurations.

- Define Scaling Policies: Create scaling policies to define how the Auto Scaling Group should scale based on metrics such as CPU utilization. For example, you can define a policy to add more instances when CPU utilization exceeds a certain threshold.

- Monitor and Adjust: Monitor your application’s performance and adjust scaling policies as needed to ensure optimal performance and cost-efficiency.
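The decision logic behind a threshold-based scaling policy can be sketched in a few lines. This is a local simulation under stated assumptions, not the AWS API and not AWS's actual scaling algorithm: the `ScalingPolicy` class, the 70% and 30% thresholds, and the CPU readings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    min_instances: int
    max_instances: int
    scale_out_cpu: float = 70.0  # assumed threshold: add capacity above this
    scale_in_cpu: float = 30.0   # assumed threshold: remove capacity below this
    step: int = 1                # instances added or removed per decision

    def desired_capacity(self, current: int, avg_cpu: float) -> int:
        """Return the instance count the group should move to."""
        if avg_cpu > self.scale_out_cpu:
            target = current + self.step
        elif avg_cpu < self.scale_in_cpu:
            target = current - self.step
        else:
            target = current
        # Never leave the configured minimum/maximum bounds.
        return max(self.min_instances, min(self.max_instances, target))


policy = ScalingPolicy(min_instances=2, max_instances=5)
capacity = 2
history = []

# Hypothetical average CPU readings (percent), one per evaluation period.
for cpu in [85, 90, 88, 92, 55, 20, 15, 10, 10]:
    capacity = policy.desired_capacity(capacity, cpu)
    history.append(capacity)

print(history)  # [3, 4, 5, 5, 5, 4, 3, 2, 2]
```

The group grows one instance at a time while CPU stays high, stops at the maximum of 5, holds steady in the 30% to 70% band, and shrinks back to the minimum of 2 once load drops. The gap between the two thresholds keeps the group from flapping between sizes when utilization hovers near a single cutoff.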

Conclusion:

Scalability is essential for businesses operating in the cloud to accommodate growing workloads, maintain performance, and meet customer demands effectively. By adopting strategies such as auto scaling, load balancing, microservices architecture, serverless computing, and distributed databases, businesses can achieve scalability in the cloud and ensure their applications can handle increasing demands efficiently. As organizations continue to embrace cloud technologies and digital transformation, scalability remains a critical factor for success in today’s competitive landscape.